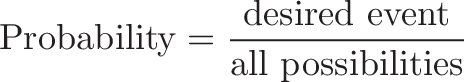

While studying for the excellent Udacity Data Analyst Nanodegree, I found myself struggling to answer the quiz questions on Bayes’ Theorem. To help myself understand it, I studied a number of other resources too. I concluded that writing an article myself would a) help reinforce this difficult subject for me and b) hopefully help others as well.

In this article I will explain Bayes’ Theorem in simple terms and walk through a worked example.

What Is Bayes’ Theorem?

Bayes’ Theorem is a widely used result in statistics and probability, which makes it very important in data science and data analysis. For example, it is the basis of Bayesian inference. This is an approach to statistical inference in which we determine, and then adjust, the probability of a hypothesis as more data or information becomes available.
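For reference, here is the general form of the theorem. It is written for a generic hypothesis H and evidence E; these letters are my own labels, not the course's notation. The second form expands the denominator P(E) using the law of total probability. That expansion is the same "add up every way a positive result can happen" step used in the cancer example later on.

```latex
P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)}
            = \frac{P(E \mid H)\,P(H)}{P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H)}
```

Here P(H) is the prior, P(E | H) is the likelihood of the evidence if the hypothesis is true, and P(H | E) is the posterior.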

What Are its Applications?

For example, it can be used to estimate the probability that a financial transaction is fraudulent, to interpret the result of a medical test, or to estimate the chances of a particular return on a stock. There are examples in almost every industry imaginable, from finance to sport, medicine to engineering, and video games to music.

What does it Do?

As mentioned above, Bayesian inference gives us the probability of an event given certain evidence, such as the result of a test.

We must first keep a few things in mind:

- A test for fraud is separate from whether the transaction actually is fraud.

- Tests are not perfect, so they give us false positives (telling us a transaction is fraud when in reality it isn’t) and false negatives (missing fraud that does exist).

- Bayes’ Theorem turns the results of your tests into the actual probability of the event.

- We start with a prior probability and combine it with our evidence, which gives us our posterior probability.
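The prior, evidence, posterior cycle can be sketched in a few lines of Python. This is a minimal illustration, not code from the course, and the function name `update` is my own. The sketch borrows the numbers from the cancer example that follows. Feeding each posterior back in as the next prior assumes that repeated test results are independent given the patient’s true condition. That is a simplifying assumption which real repeated tests may not satisfy.

```python
def update(prior: float, p_evidence_if_true: float, p_evidence_if_false: float) -> float:
    """Return the posterior P(H | E) from the prior P(H) and the two likelihoods."""
    numerator = p_evidence_if_true * prior                         # P(E | H) * P(H)
    evidence = numerator + p_evidence_if_false * (1.0 - prior)     # total P(E)
    return numerator / evidence


# Numbers from the cancer example: 1% prior, 90% chance of a positive test
# with cancer, 10% chance of a positive test without cancer.
belief = 0.01
for test_number in (1, 2):
    # Assumption: test results are independent given the true condition,
    # so the posterior after one test can serve as the prior for the next.
    belief = update(belief, 0.9, 0.1)
    print(f"after positive test {test_number}: {belief:.4f}")
```

Under that assumption, one positive test moves the probability from 1% to about 8.3%. A second positive test moves it to 45%.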

How Does it Work? An Example

Consider the scenario of testing for cancer, where we want to find the probability that a patient has cancer given a particular test result.

- The chance that a patient has this type of cancer is 1%, written as P(C) = 1%. This is the prior probability.

- The test result is Positive 90% of the time if you have C, written as P(Pos | C) = 90%. This is the sensitivity. The remaining 100% - 90% = 10% are patients who do have C but whom the test misses. These are the false negatives, P(Neg | C).

- The test result is Negative 90% of the time if you do not have C, written as P(Neg | ¬C) = 90%. This is the specificity. The remaining 100% - 90% = 10% are patients without C whom the test wrongly flags as Positive. These are the false positives, P(Pos | ¬C).
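As a quick sanity check on these definitions, the sketch below derives the two error rates as complements of the sensitivity and specificity. The variable names are my own. The key point is that the 10% left over from the sensitivity is the false-negative rate. The 10% left over from the specificity is the false-positive rate.

```python
# Quantities given in the cancer example, as probabilities rather than percentages.
p_cancer = 0.01          # P(C), the prior
sensitivity = 0.90       # P(Pos | C)
specificity = 0.90       # P(Neg | ¬C)

# For a given condition the two possible test results must add up to 1,
# so each error rate is the complement of the matching "correct" rate.
false_negative_rate = 1 - sensitivity   # P(Neg | C)
false_positive_rate = 1 - specificity   # P(Pos | ¬C)
p_no_cancer = 1 - p_cancer              # P(¬C)

print(round(false_negative_rate, 2), round(false_positive_rate, 2), round(p_no_cancer, 2))
```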

Let’s put this in a table so it’s a bit more readable.

|               | Cancer – 1% | Not Cancer – 99% |
|---------------|-------------|------------------|
| Positive Test | 90%         | 10%              |
| Negative Test | 10%         | 90%              |

- Our posterior probability is what we’re trying to find: the chance that cancer is actually present given a Positive Test, written as P(C | Pos). To get it, we have to take account of false positives as well as true positives.

- Cancer and a positive test: P(Pos | C) x P(C) = 0.9 x 0.01 = 0.009

- No cancer and a positive test: P(Pos | ¬C) x P(¬C) = 0.1 x 0.99 = 0.099
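The two products above can be checked directly. This is a minimal sketch with my own variable names. Note that P(C) = 1% must be entered as 0.01.

```python
p_cancer = 0.01
p_pos_given_cancer = 0.90       # sensitivity, P(Pos | C)
p_pos_given_no_cancer = 0.10    # false-positive rate, P(Pos | ¬C) = 1 - specificity

# Likelihood x prior for each hypothesis. These are joint probabilities,
# not yet the posterior: they still need to be divided by P(Pos).
score_cancer = p_pos_given_cancer * p_cancer
score_no_cancer = p_pos_given_no_cancer * (1 - p_cancer)

print(f"{score_cancer:.3f} {score_no_cancer:.3f}")  # prints: 0.009 0.099
```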

Let’s add these to our table.

|               | Cancer – 1%                | Not Cancer – 99%            |
|---------------|----------------------------|-----------------------------|
| Positive Test | True Pos 90% * 1% = 0.009  | False Pos 10% * 99% = 0.099 |
| Negative Test | False Neg 10% * 1% = 0.001 | True Neg 90% * 99% = 0.891  |
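The whole table can be built the same way. In this sketch the dictionary layout and names are my own. It also checks that the four cells sum to 1, since every patient falls into exactly one of them.

```python
p_cancer = 0.01
sensitivity = 0.90   # P(Pos | C)
specificity = 0.90   # P(Neg | ¬C)

# Joint probabilities P(test result and condition) for all four cells.
joint = {
    ("Pos", "C"): sensitivity * p_cancer,                # true positive
    ("Pos", "¬C"): (1 - specificity) * (1 - p_cancer),   # false positive
    ("Neg", "C"): (1 - sensitivity) * p_cancer,          # false negative
    ("Neg", "¬C"): specificity * (1 - p_cancer),         # true negative
}

for (result, condition), p in joint.items():
    print(f"P({result}, {condition}) = {p:.3f}")

# The four cells cover every possible outcome, so they must sum to 1.
print(f"total = {sum(joint.values()):.3f}")
```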

But of course that’s not the complete story. We need to account for every way a positive result can happen, given all possible outcomes.

The chance of getting a true positive result is 0.009. The chance of getting any type of positive result is the chance of a true positive plus the chance of a false positive (0.009 + 0.099 = 0.108).

So our actual posterior probability of cancer given a positive test is 0.009 / 0.108 ≈ 0.0833, or about 8.3%.
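The final division is shown as a short sketch below. It uses the two Positive Test cells from the table; the variable names are my own.

```python
true_positive = 0.009    # P(Pos and C)  = 0.9 x 0.01
false_positive = 0.099   # P(Pos and ¬C) = 0.1 x 0.99

p_positive = true_positive + false_positive           # P(Pos): any positive result
p_cancer_given_positive = true_positive / p_positive  # P(C | Pos)

print(f"P(Pos) = {p_positive:.3f}")                   # 0.108
print(f"P(C | Pos) = {p_cancer_given_positive:.4f}")  # 0.0833
```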

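In Bayes’ Theorem terms, c is the event that a patient has cancer and x is a positive test result. The calculation is then written as follows:

P(c|x) = P(x|c) * P(c) / [P(x|c) * P(c) + P(x|¬ c) * P(¬ c)] = (0.9 * 0.01) / (0.9 * 0.01 + 0.1 * 0.99) ≈ 0.083

The individual terms are: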

- P(c|x) = Chance of having cancer (c) given a positive test (x). This is what we want to know: How likely is it to have cancer with a positive result? In our case it was 8.3%.

- P(x|c) = Chance of a positive test (x) given that you had cancer (c). This is the chance of a true positive, 90% in our case.

- P(c) = Chance of having cancer (1%).

- P(¬ c) = Chance of not having cancer (99%).

- P(x|¬ c) = Chance of a positive test (x) given that you didn’t have cancer (¬ c). This is the false positive rate, 10% in our case. (The 0.099 in our table is this rate multiplied by P(¬ c).)
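Another way to convince yourself that the 8.3% is right is to simulate patients one at a time and count how many of the positive results really have cancer. This Monte Carlo check is my own addition rather than something from the course. The patient count and the seed are arbitrary choices, so the simulated figure will differ slightly from the exact value given by the formula.

```python
import random


def simulate(n_patients: int, seed: int = 0) -> float:
    """Estimate P(c | x) by simulating individual patients and their test results."""
    rng = random.Random(seed)
    positives = 0
    true_positives = 0
    for _ in range(n_patients):
        has_cancer = rng.random() < 0.01       # P(c) = 1%
        if has_cancer:
            positive = rng.random() < 0.90     # P(x | c) = 90%
        else:
            positive = rng.random() < 0.10     # P(x | ¬c) = 10%
        if positive:
            positives += 1
            if has_cancer:
                true_positives += 1
    # Among all simulated positive tests, the fraction that are real cancer cases.
    return true_positives / positives


# Exact answer from Bayes' Theorem, for comparison.
exact = (0.9 * 0.01) / (0.9 * 0.01 + 0.1 * 0.99)
estimate = simulate(500_000)
print(f"exact: {exact:.4f}, simulated: {estimate:.4f}")
```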

Resources

- https://eu.udacity.com/course/data-analyst-nanodegree--nd002

- https://betterexplained.com/articles/an-intuitive-and-short-explanation-of-bayes-theorem/

- https://towardsdatascience.com/bayes-theorem-the-holy-grail-of-data-science-55d93315defb

- https://towardsdatascience.com/all-about-naive-bayes-8e13cef044cf

- https://medium.com/@montjoile/easy-and-quick-explanation-naive-bayes-algorithm-99cb5f3f4e9c